- Sep 3, 2024

- 15 minutes

The AI landscape is in a constant state of evolution, driven by rapid advancements and innovations. While the introduction of accessible LLMs promised enhanced efficiency, new business models and competitive advantages, the path to their adoption is fraught with challenges. This chapter delves into the complexities and hurdles organisations face when integrating new technologies and highlights a path towards fully adopting LLMs and bringing out all the value they possibly can offer.

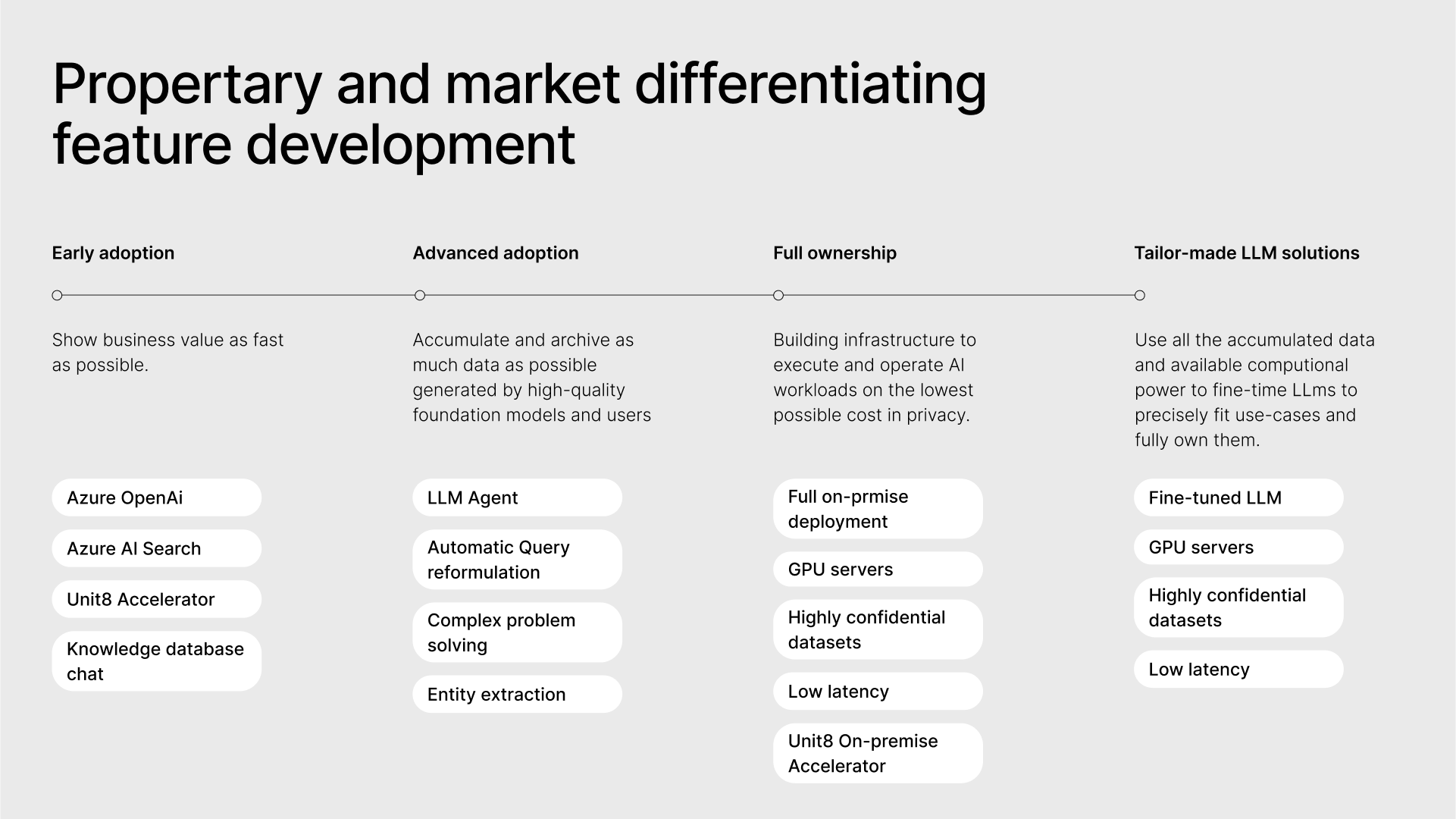

Four stages of LLM adoption and milestone goals.

1. Early Adoption

While most people are excited about AI, one of the most significant barriers to adopting new technologies is resistance to change. This resistance can stem from various sources, including employees, management, stakeholders and even the organizational culture itself. The best way to push through this initial resistance is to find a simple and elegant but practically useful use case that integrates an LLM. It can be prototyped and shipped quickly with low initial costs using any cloud vendor in a pay-per-use manner.

A prominent use case is the implementation of Document Database Chat through a simple Retrieval-Augmented Generation (RAG) model. The benefits of such a system are clear. Document repositories, such as Microsoft SharePoint and network drives filled with PDFs, often present significant challenges in terms of searchability. This is due to the lack of an integrated solution or the inadequacy of existing search functionalities. By leveraging a RAG model, organizations can enhance their ability to search and retrieve information efficiently from these vast and often unstructured data stores. Implementing such a solution shows the users how LLMs can provide an interface that everybody can naturally use and brings immediate value to the users by enabling discoverability to document stores where it was difficult to find anything before.

This quick victory, possible just in 6 weeks with the Unit8 accelerator program, will bring the necessary first success, that leveraged one can get the necessary support from within the organization to propel LLM adoption to the next phase.

2. Advanced Adoption

At the time of writing this article (summer 2024) plenty of early adopter enterprises finished their first LLM application with success resulting in an immediate push from stakeholders for more. While building a chat app is fairly simple with the open-source tooling lying around, both from a technical and added value perspective, taking it to the next level and finding new valuable use cases can be challenging. While there are no one-size-fits-all recipes out there, there are plenty of ideas worth looking at.

- Entity Extraction

Processing unstructured data, such as lengthy texts, is a time-consuming task for humans, who read at an average speed of 200 words per minute. The challenge lies in extracting the required data from these texts and converting it into a structured or semi-structured format suitable for computer processing and storage. This process is not only labor-intensive but also repetitive and prone to errors.

Large Language Models (LLMs) provide a solution by automating the extraction of complex entities from texts. However, it is crucial to ensure that critical information is not missed and that hallucinations (incorrect or fabricated information) are minimized. Although a significant portion of the process can be automated, human oversight remains necessary. A human reviewer must check the LLM-extracted results, correct any mistakes, and push the data to a database through established workflows.

Unit8 has successfully implemented this approach for an asset management company. By automating the extraction of data from due diligence documents, which are essential for investment decisions, they reduced the workload on employees by over 50%.

- Agent supported assistants

While chatting is natural to everyone the underlying technology, whether it is a vector database, API or just LLM itself needs proper formulation of a request. For a technology adopted by such a wide audience in terms of technical familiarity we cannot expect each user to learn proper prompt engineering skills. Luckily, one of the things that LLMs do exceptionally well is reformulating text which we can use to help the user write proper search queries.

There are various techniques such as ReAct [2] that can turn our LLM model into an agent which continuously loops, reasons and works on the user input will help the users achieve whatever mission they are on. Attaching such an agent to a RAG chat lets the user talk more naturally to the assistant; it will easily solve vague but from a machine perspective complex instructions like “Summarize all these points” prompting the agent to go all the list elements and pull relevant information one-by-one.

- Deep integration into applications

Deep integration of LLMs into an application offers extensive benefits beyond a simple LLM-powered chatbot. Innovative use cases like LLM-powered onboarding enhance functionality by automating complex tasks, personalizing user interactions, and adding content generation. It works by the application providing real-time context to the LLM, while it works in the background returning context to the UI. This integration improves user experience through natural language interfaces and proactive assistance while also lowering the barrier for adoption for the everyday users as the LLM-based feature will fit exactly to the user’s workflow. Additionally, it provides a competitive edge by enabling innovative features, scalability, and adaptability to future advancements, thus significantly boosting the application’s overall efficiency and market position.

Organizational goals

At this point, there is a high possibility that multiple teams are pushing the agenda inside the organization. While some goals are fairly obvious and standard at this stage of adoption of new technologies, like making it easily available and integrated into the enterprise ecosystem, for example in terms of monitoring and access control, other agendas are specific for LLM adoption and it is easy to overlook them but can yield huge results in a later stage with little effort in the present.

One of the most critical aspects of this process is the collection of training data. At this stage, substantial traffic is being directed through the chosen LLM vendor. While large foundation models are costly to operate at scale, they generate highly valuable data. Although this data is already benefiting the organization, capturing and storing it for future use can unlock additional value. As use cases become more complex and traffic continues to grow, fine-tuning will be essential for refining features and stabilizing the LLM’s performance in edge cases. In this context, large volumes of training data will be the key resource.

3. Full ownership

While the most prevalent adoption pattern across enterprises is using SaaS LLM vendor lots of organisations stop there on their journey thus missing out on opportunities which are too expensive, not working with out-of-the-box or not safe enough to be implemented using public SaaS LLMs.

Bringing AI capability on-premise is not just a good bet for the future of LLMs but possibly secures the infrastructure for any future advancements in artificial neural networks. Any organization that already uses traditional servers can adapt GPU-capable servers as well. Modern orchestration software like Kubernetes and Docker already supports GPUs natively which makes the deployment of GPU-requiring workloads similar to those without one, but more on that in the next chapter.

It’s important to note that moving to an on-premise solution may not be suitable for every organization. Each case varies based on factors such as size, growth trajectory, speed, legal requirements, and available resources. Therefore, organizations should carefully evaluate whether this is a worthwhile investment and explore their available options. While this topic could be covered in greater detail, it’s worth mentioning that several technologies can address specific requirements. For instance, implementing data-at-rest encryption with an offsite key vault can help alleviate privacy concerns for stakeholders.

That said, choosing not to go on-premise does not prevent an organization from adopting this technology. Although some features, such as certain fine-tuning methods, may not be accessible through SaaS LLM vendors, cloud providers are continually enhancing their offerings. For example, Google recently announced the availability of serverless GPU hosting in its Cloud Run service, which further expands the range of cloud-based options [3].

4. Building tailor-made LLM solution

While everyone is using the same hosted LLM providers, transitively, will have similar advantages and disadvantages. While bringing the solution on-premise already unlocked previously cost-constrained business models, fine-tuning LLMs can further unlock solutions which previously were not implementable with vanilla models.

You can train a model to interpret any textual format that the vendor may not have anticipated, or guide the model to focus on and extract the most relevant information for your specific use case. Another option is to consolidate multiple LLM call steps into a single one by editing training data and training on it, reducing latency and costs while delivering an improved user experience. The possibilities are virtually limitless.

Now, the careful planning pays off as collected history comes back into play. The organisation already paid for the generation with high-quality LLMs and now that the organisation is ready for LLM fine-tuning as the infrastructure is deployed, it can use those resources to bake in the high-quality training data into models, open-source of SaaS. The most supported method is called supervised fine-tuning which is the most effective way to customise LLMs. Tests show that an LLM fine-tuned to a specific task can get as good as, or even in some cases outperform a one-size bigger model. Take a 70B LLama3 model and thousand sessions of ChatGPT 4o generated summarisation and data retrieval dataset, curate the dataset removing bad examples and fixing behaviour that you don’t like and you will have your own LLM model that specialises in RAG while possibly understanding the intricacies of your domain.

Another added value is with the fine-tuning process you will teach the model a lot of the formatting and behavioural constraints that you fed it with prompting before, making a large part of the initial system prompt unnecessary which will further increase the performance of your model and decrease overall cost. This process also will help ensure that your LLM maintains consistent behaviour in edge cases, such as when the context becomes more complex or the user deviates from the intended path—scenarios that are likely to occur frequently as the project reaches a more advanced stage.

Conclusion

In conclusion, the journey towards fully adopting large language models (LLMs) is intricate, presenting both significant challenges and substantial opportunities. As organizations move to more advanced stages, the integration of sophisticated features like agent-supported assistants can further enhance the value derived from LLMs. The strategic collection and utilization of high-quality training data during these stages set the foundation for future fine-tuning and cost-efficiency improvements. Ultimately, achieving full ownership and building tailor-made LLM solutions empower organizations to leverage AI capabilities securely and cost-effectively, unlocking unique advantages and fostering innovation. By systematically navigating each phase of the LLM adoption lifecycle, enterprises can not only harness the current benefits of AI but also position themselves to capitalize on future advancements in artificial neural networks.

[1] Turbocharging LLama2 by Perplexity AI: https://www.perplexity.ai/hub/blog/turbocharging-llama-2-70b-with-nvidia-h100

[2] ReAct: Synergizing Reasoning and Acting in Language Models https://arxiv.org/abs/2210.03629

[3] Run your AI inference applications on Cloud Run with NVIDIA GPUshttps://cloud.google.com/blog/products/application-development/run-your-ai-inference-applications-on-cloud-run-with-nvidia-gpus