- May 19, 2025

- 10 minutes

Marc Vendramet

Thibaut Paschal

Caroline Adam

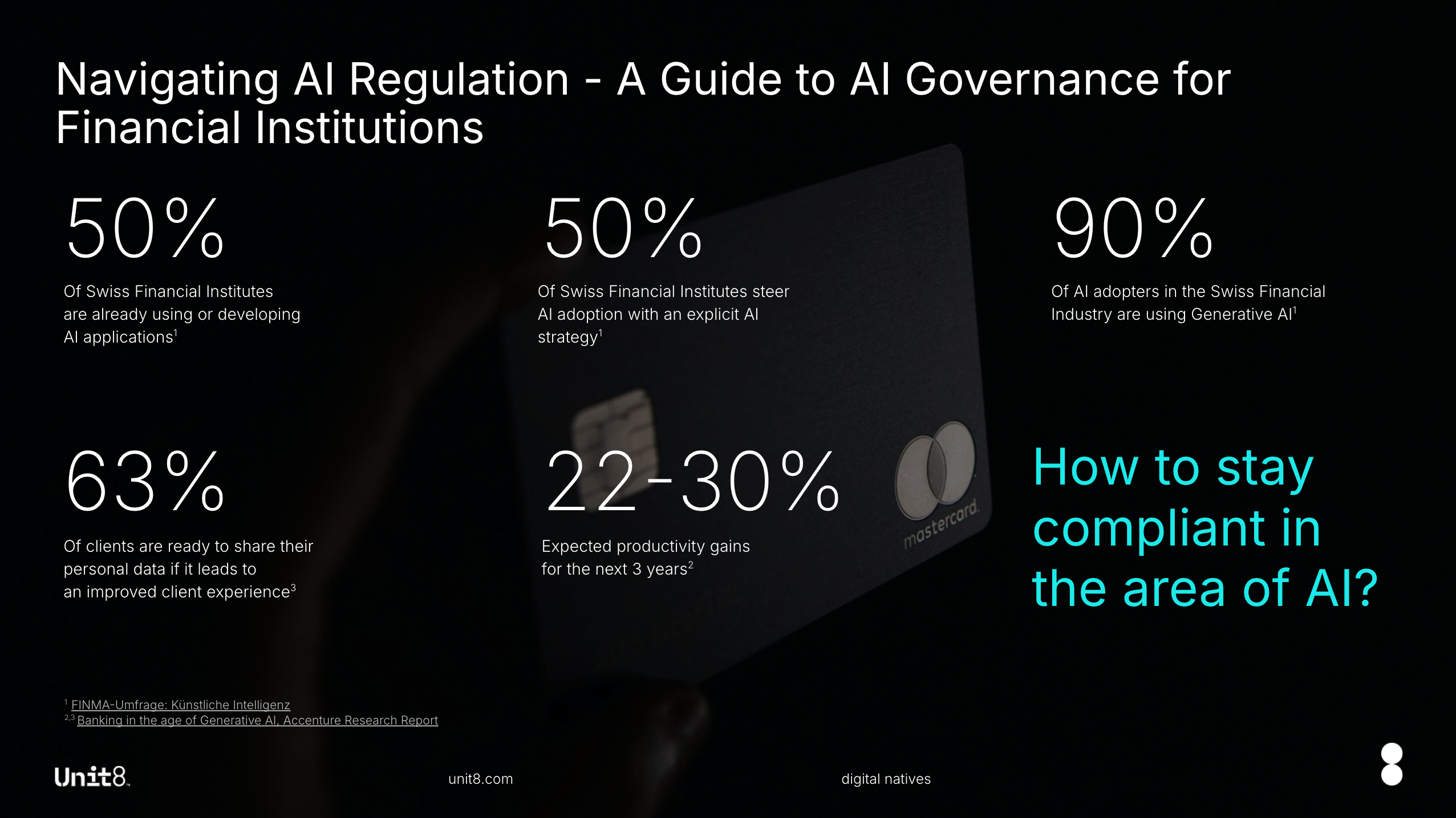

The recent FINMA survey of more than 400 Swiss financial services companies [1] confirms what Unit8 already observes in the market: financial institutions across the country are rapidly adopting artificial intelligence.

- Around 50% of respondents already use AI or are developing AI applications, and significantly more adoption is underway.

- Over 90% of AI adopters use generative AI within their company.

- Only 50% steer AI adoption with an explicit AI strategy.

These figures are another indicator that artificial intelligence is transforming the financial industry, offering opportunities to improve decision-making, elevate customer experiences, and streamline operations. Yet these advancements also bring challenges that require careful management to ensure AI is used responsibly, ethically, and in compliance with regulations.

In Switzerland, financial institutions are primarily guided by FINMA Guidance 08/2024 [2], which outlines expectations for governance and risk management when deploying AI technologies. In addition, the Federal Office of Communications (BAKOM) submitted its overview of AI regulation to the Swiss Federal Council in February 2025 [3]. Based on this overview, the Federal Council announced that it intends to regulate AI so that its potential can be used to strengthen Switzerland as a location for business and innovation, and that regulation will follow a primarily sector-specific approach [4].

Meanwhile, the EU AI Act provides a comprehensive regulatory framework for organizations operating in the EU, including those in the financial sector. Financial institutions must establish strong AI governance to meet these evolving regulatory demands and uphold ethical standards.

This article consolidates Unit8’s perspectives on AI governance, drawn from workshops conducted with leading Swiss financial institutions among our clients. We provide an overview of the FINMA guidance and the EU AI Act and their implications for financial institutions, then explore strategies for developing AI governance that manages risks effectively.

What are the key principles and considerations of FINMA’s recent guidance on AI Governance and Risk Management?

In December 2024, FINMA, the Swiss Financial Market Supervisory Authority, issued guidance outlining its expectations for AI governance and risk management for financial institutions. The guidance advocates a risk-based approach, encouraging institutions to proactively address AI-related risks.

FINMA highlights several critical risks: model risks such as bias, lack of robustness, or lack of explainability; operational risks such as IT and cyber risks; third-party risks; and legal and reputational risks.

To tackle these risks, FINMA proposes several measures for financial institutions to implement:

- Establish a governance framework with clear responsibilities and integrate AI risk management into existing governance structures.

- Maintain a centralized inventory of all AI applications, including risk classification based on materiality and likelihood of risk occurrence.

- Ensure the quality of data and models used in AI systems to prevent inaccuracies and biases.

- Implement robust testing and continuous monitoring of AI systems to detect and mitigate potential risks.

- Provide adequate documentation and ensure AI outputs are explainable to foster transparency and trust.

- Conduct independent reviews to validate AI systems and their risk management processes.
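To make the inventory requirement more concrete, the sketch below models an inventory entry that carries its own risk classification. It is a minimal illustration under stated assumptions, not a FINMA-prescribed format: the field names, the three-point `Level` scale, the materiality × likelihood product, and the thresholds separating "low", "medium", and "high" are all hypothetical choices an institution would calibrate to its own risk framework.

```python
from dataclasses import dataclass, field
from enum import IntEnum


class Level(IntEnum):
    """Hypothetical three-point scale used for both materiality and likelihood."""
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass
class AIApplication:
    """One entry in a centralized AI inventory (field names are illustrative)."""
    name: str
    owner: str
    third_party: bool    # True if the AI comes from an external vendor
    materiality: Level   # impact on clients, finances, or operations if a risk materializes
    likelihood: Level    # probability that a risk such as bias or model error occurs

    @property
    def risk_score(self) -> int:
        # Simple materiality x likelihood matrix, ranging from 1 to 9
        return int(self.materiality) * int(self.likelihood)

    @property
    def risk_class(self) -> str:
        # Thresholds are assumptions, not values prescribed by FINMA
        if self.risk_score >= 6:
            return "high"
        if self.risk_score >= 3:
            return "medium"
        return "low"


@dataclass
class AIInventory:
    applications: list[AIApplication] = field(default_factory=list)

    def register(self, app: AIApplication) -> None:
        # Reject duplicates so each application appears exactly once
        if any(a.name == app.name for a in self.applications):
            raise ValueError(f"{app.name} is already registered")
        self.applications.append(app)

    def by_class(self, risk_class: str) -> list[AIApplication]:
        return [a for a in self.applications if a.risk_class == risk_class]


inventory = AIInventory()
inventory.register(AIApplication("credit-scoring-model", "Retail Lending", False, Level.HIGH, Level.MEDIUM))
inventory.register(AIApplication("email-summarizer", "Operations", True, Level.LOW, Level.MEDIUM))
print([a.name for a in inventory.by_class("high")])  # ['credit-scoring-model']
```

Deriving the score as a property, rather than storing it, means that any update to materiality or likelihood immediately changes the classification.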

FINMA’s guidance reflects a technology-neutral, principles-based approach, encouraging institutions to integrate AI risk management into their operations to ensure security and stability as AI adoption increases. As the guidance outlines supervisory expectations rather than strict rules, it is crucial for financial institutions to demonstrate a proactive and well-considered approach to AI risk management that aligns with FINMA’s principles.

How does the EU AI Act impact financial institutions?

The EU AI Act introduces a comprehensive regulatory framework for AI across Europe and affects financial institutions through its extraterritorial reach: it applies regardless of where an institution is located if its AI systems are placed on the EU market or their outputs are used in the EU. Swiss companies with users or operations in the EU must therefore prepare to comply with this binding regulation, which, unlike FINMA’s guidance, imposes strict requirements and penalties for non-compliance.

Like FINMA’s guidance, the EU AI Act adopts a risk-based approach, regulating AI systems according to their risk level. It imposes stringent requirements on high-risk applications, including those used for credit scoring of natural persons and for risk assessment and pricing in life and health insurance. For these applications, the Act introduces specific obligations, including risk management systems, data governance, technical documentation, transparency, human oversight, and cybersecurity measures. Certain AI practices deemed to carry unacceptable risk are prohibited outright.
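As a rough illustration of how inventory entries could be tagged with the Act's risk categories, the sketch below maps hypothetical use-case tags to tiers and returns the most severe tier triggered. The tag names and sets are assumptions made for this example and are far from exhaustive. In practice a system can carry several obligations at once (a high-risk system may also have transparency duties), so a real classification requires legal review of the Act's text.

```python
from enum import Enum


class RiskTier(Enum):
    PROHIBITED = "unacceptable risk (prohibited practice)"
    HIGH = "high risk"
    LIMITED = "limited risk (transparency obligations)"
    MINIMAL = "minimal risk"


# Hypothetical tags an AI inventory might attach to each use case.
# This mapping is a simplified illustration, not a substitute for legal review.
PROHIBITED_TAGS = {"social_scoring", "subliminal_manipulation"}
HIGH_RISK_TAGS = {"creditworthiness_natural_persons", "life_health_insurance_pricing"}
TRANSPARENCY_TAGS = {"customer_chatbot", "synthetic_content_generation"}


def classify(tags: set[str]) -> RiskTier:
    """Return the most severe tier triggered by any of the use case's tags."""
    if tags & PROHIBITED_TAGS:
        return RiskTier.PROHIBITED
    if tags & HIGH_RISK_TAGS:
        return RiskTier.HIGH
    if tags & TRANSPARENCY_TAGS:
        return RiskTier.LIMITED
    return RiskTier.MINIMAL


print(classify({"creditworthiness_natural_persons"}).value)  # high risk
print(classify({"customer_chatbot"}).value)  # limited risk (transparency obligations)
```

Checking tiers from most to least severe ensures that a use case matching several tags is never under-classified.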

Companies must meet key deadlines under the EU AI Act, with initial obligations around prohibited AI practices and AI literacy for employees starting in February 2025. As more provisions become applicable, particularly for high-risk systems by August 2026, institutions must proactively begin preparing to ensure compliance.

How companies can navigate regulation through effective AI Governance

Companies should focus on creating comprehensive policies, processes, and ethical frameworks that guide the responsible development and use of AI. This not only helps meet the requirements of both FINMA and the EU AI Act, but also fosters innovation and AI adoption by reducing uncertainty and giving internal teams clear guidance. As AI continues to evolve, a flexible and adaptable framework is essential for effective risk management.

Unit8 proposes a holistic approach centered on three core pillars: Processes, People, and Technology.

Processes: To govern AI systems effectively, organizations must implement processes to map, measure, and manage AI risks. This involves establishing controls to govern risks across the organization, identifying high-risk use cases, and assessing third-party software. At the level of individual use cases, mapping, measuring, and monitoring the risks specific to each AI application is critical. By prioritizing resources according to risk level and integrating AI risk management into existing processes, companies can target their efforts more efficiently.
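One way to prioritize resources by risk level is to reassess higher-risk use cases more frequently and to work through overdue reviews in order of risk. The sketch below assumes hypothetical review intervals (90, 180, and 365 days) and a `risk_class` taken from the AI inventory; neither FINMA nor the EU AI Act prescribes these values.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Hypothetical review intervals: higher-risk use cases are reassessed more often.
REVIEW_INTERVAL = {
    "high": timedelta(days=90),
    "medium": timedelta(days=180),
    "low": timedelta(days=365),
}
# Lower number = reviewed first
PRIORITY = {"high": 0, "medium": 1, "low": 2}


@dataclass
class UseCase:
    name: str
    risk_class: str   # "high", "medium" or "low", e.g. taken from the AI inventory
    last_review: date

    def next_review(self) -> date:
        return self.last_review + REVIEW_INTERVAL[self.risk_class]


def review_queue(use_cases: list[UseCase], today: date) -> list[str]:
    """Return overdue use cases, highest risk first, then the longest-overdue first."""
    overdue = [u for u in use_cases if u.next_review() <= today]
    overdue.sort(key=lambda u: (PRIORITY[u.risk_class], u.next_review()))
    return [u.name for u in overdue]


cases = [
    UseCase("email-summarizer", "low", date(2024, 3, 1)),
    UseCase("credit-scoring-model", "high", date(2025, 1, 15)),
    UseCase("kyc-document-checker", "medium", date(2024, 12, 1)),
]
print(review_queue(cases, today=date(2025, 5, 19)))  # ['credit-scoring-model', 'email-summarizer']
```

Here the medium-risk KYC checker is not yet due (its next review falls on 30 May 2025), while the high-risk credit model jumps ahead of the older but low-risk summarizer.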

People: Organizations must define clear roles and responsibilities, foster a culture of AI literacy and ethical awareness, and establish structures that promote collaboration and accountability. Key roles such as Model Owners, Stewards, and Auditors, together with collaboration across diverse profiles, help identify and mitigate AI-related risks. Organizations frequently establish AI Sounding Boards, made up of individuals with diverse backgrounds and levels of experience, to help ensure the ethical and compliant deployment of AI at the organizational level. Investing in training programs equips the workforce with the skills needed to navigate the ethical and legal challenges of AI.

Technology: Tools and technology are essential to support the implementation of AI governance. Identifying and managing high-risk AI solutions centrally is crucial. Model catalogs provide an overview of AI models, their risk levels, and compliance requirements. Once high-risk solutions are identified, risk assessment methodologies can be applied to document specific risks and define mitigation measures. Lastly, comprehensive monitoring and logging of AI activity and AI-supported processes is critical for traceability and risk mitigation. Technology can also support your governance processes, for example through dedicated tools that monitor the changing regulatory landscape, or through publicly available knowledge bases such as MITRE ATLAS (https://atlas.mitre.org), which catalogs adversary techniques against AI systems based on real-world attack observations.
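For the monitoring and logging point, a minimal sketch of an audit trail for AI interactions using only Python's standard library could look as follows. The record fields, the `ai_audit` logger name, and the choice to store SHA-256 hashes instead of raw prompts and outputs are assumptions for illustration; which content must be retained, and where, depends on the institution's traceability and data-protection requirements.

```python
import hashlib
import json
import logging
import uuid
from datetime import datetime, timezone

# Standard-library logger writing one JSON record per line. In practice the
# handler would point to a secured, tamper-evident store rather than stderr.
audit_logger = logging.getLogger("ai_audit")
audit_logger.setLevel(logging.INFO)
audit_logger.addHandler(logging.StreamHandler())


def _sha256(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def log_ai_call(model_id: str, model_version: str, prompt: str, output: str, user: str) -> dict:
    """Record one AI interaction so it can be traced back later."""
    record = {
        "event_id": str(uuid.uuid4()),
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_id": model_id,
        "model_version": model_version,
        "user": user,
        # Hashes instead of raw text, in case prompts or outputs contain client data
        "input_sha256": _sha256(prompt),
        "output_sha256": _sha256(output),
    }
    audit_logger.info(json.dumps(record))
    return record


entry = log_ai_call(
    "email-summarizer", "1.3.0",
    "Summarize the attached client email.", "Client requests a callback.",
    "analyst-42",
)
```

Hashing keeps client data out of the log while still letting an auditor confirm, by recomputing the hash, that a given prompt and output match a logged event. If full text is needed for traceability, it would go to a separately secured store.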

To prepare for FINMA and other AI regulations, companies should take a stepwise approach. First, establish a comprehensive inventory of AI use cases, including those from third-party vendors, and define their scope and risk category. Second, extend your existing risk evaluation framework to cover AI risks and support the risk classification of AI solutions – ideally already in accordance with the EU AI Act risk categories. Third, enhance AI literacy among employees, emphasizing the importance of understanding AI risks and compliance. Fourth, assess and mitigate risks associated with high-risk AI applications. Finally, ensure the sustainability of AI governance by aligning processes with strategic priorities and developing a comprehensive AI risk management framework.

In conclusion, as AI transforms the financial sector, establishing robust AI governance frameworks is essential for regulatory compliance. By adopting a proactive approach to AI risk management, financial institutions can not only meet regulatory requirements and mitigate risks, but also enhance customer trust and gain a competitive edge. Ultimately, effective AI governance is not just about compliance; it is about driving responsible innovation and ensuring sustainable growth at scale in an increasingly AI-driven world.

Sources:

[1] 25.04.2025 – FINMA survey: Artificial intelligence gaining ground in Swiss financial institutions https://www.finma.ch/de/news/2025/04/20250424-mm-umfrage-ki/

[2] 18.12.2024 – FINMA guidance on governance and risk management when using artificial intelligence https://www.finma.ch/en/news/2024/12/20241218-mm-finma-am-08-24/

[3] 12.02.2025 – Overview of artificial intelligence regulation: Report to the Federal Council https://www.bakom.admin.ch/bakom/en/homepage/digital-switzerland-and-internet/strategie-digitale-schweiz/ai.html

[4] 12.02.2025 – AI regulation: Federal Council to ratify Council of Europe Convention https://www.news.admin.ch/en/nsb?id=104110